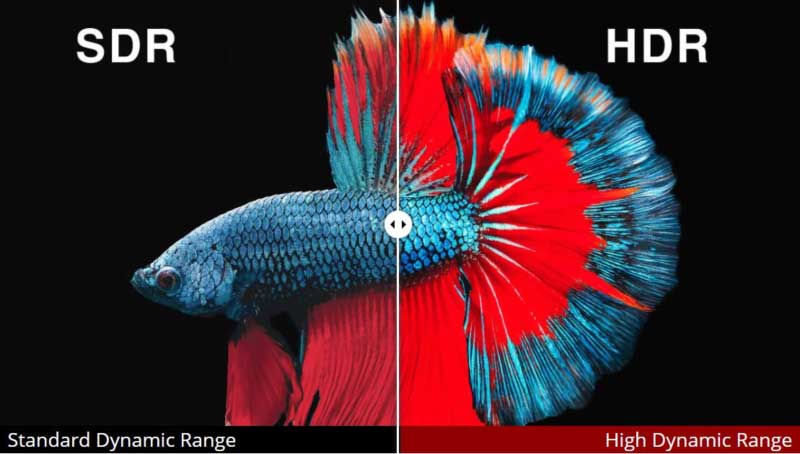

High Dynamic Range (HDR) is the next generation of color clarity and realism in images and videos. Ideal for media that require high contrast or mix light and shadows, HDR preserves the clarity better than Standard Dynamic Range (SDR).

Continue reading to learn more about HDR technology (and get a handy checklist for making the switch).

High definition, high security, high speed – in nearly every context, ‘high’ indicates elevation “to the next level”.

High Dynamic Range (HDR) is no exception. As the successor to Standard Dynamic Range (SDR), HDR has attracted more and more buzz in recent years.

Chances are you have already heard about High Dynamic Range and how it’s going to take your viewing experience “to the next level”. However, since HDR is still a fairly new technology, many people are not quite clear about how it actually works.

So, what is HDR exactly? How does it differ from SDR? Why does it matter to you?

For the answers to these questions, take a look at our HDR vs. SDR comparison guide below to find out how HDR works and why it’s here to stay!

What is HDR?

HDR is an imaging technique that captures, processes, and reproduces content in a way that increases the detail visible in both the shadows and the highlights of a scene. While HDR has long been used in traditional photography, it has recently made the jump to smartphones, TVs, monitors, and more.

So what does this mean for you? This means images have more overall detail, a wider range of colors, and look more similar to what is seen by the human eye when compared to SDR (Standard Dynamic Range) images.

For the purposes of this article, we will focus on HDR video content. To understand high dynamic range, we must first understand how standard dynamic range works.

Dynamic Range in Images

Most images you will come across contain both brighter and darker areas with displayable detail. When an image is overexposed, information in the brighter parts of the image is lost; likewise, when an image is underexposed, information in the darker parts is lost.

Dynamic range is the span of luminance (brightness) between the lightest and darkest parts of an image. An image with a high dynamic range contains a mix of very dark and very bright areas at the same time. Sunrises and sunsets are good examples of high-dynamic-range scenes.
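To make the definition concrete, here is a minimal Python sketch, assuming you already have per-pixel luminance values in nits (the sample values are hypothetical, not measured). It expresses dynamic range as the contrast ratio between the brightest and darkest non-zero values, and also in stops, where each stop is a doubling of light.

```python
import math


def dynamic_range(luminances):
    """Return (contrast_ratio, stops) for a list of luminance values in nits.

    Zero values are skipped because a ratio against perfect black is infinite.
    """
    nonzero = [v for v in luminances if v > 0]
    if not nonzero:
        raise ValueError("need at least one non-zero luminance value")
    darkest, brightest = min(nonzero), max(nonzero)
    ratio = brightest / darkest
    # One stop is a doubling of light, so the number of stops is log2(ratio).
    return ratio, math.log2(ratio)


# Hypothetical sunset: deep shadow at 0.5 nits, sunlit sky at 4000 nits.
ratio, stops = dynamic_range([0.5, 12.0, 250.0, 4000.0])
print(f"{ratio:.0f}:1 contrast, {stops:.1f} stops")  # 8000:1 contrast, 13.0 stops
```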

Dynamic Range on Your Monitor

When a monitor has a low contrast ratio or doesn’t support HDR, it is common to see colors and detail in an image “clipped” because they fall outside what the display can reproduce. As mentioned earlier, any clipped information is lost and cannot be seen. The problem becomes even more pronounced when the monitor has to reproduce a scene with a wide range of luminance.
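The sketch below illustrates clipping under simple assumptions: a hypothetical display with a 0.1-nit black level and a 100-nit peak that hard-clips anything outside that range. The scene values and display figures are illustrative only, not specifications of any real monitor.

```python
def show_on_display(scene_nits, black_level, peak):
    """Hard-clip scene luminance to what the display can show (no tone mapping).

    Values below the black level are crushed to black; values above the peak
    are clipped to white.
    """
    return [min(max(v, black_level), peak) for v in scene_nits]


def levels_lost(scene_nits, shown):
    """Count how many distinct scene values collapse into one another after clipping."""
    return len(set(scene_nits)) - len(set(shown))


scene = [0.01, 0.05, 0.2, 1.0, 50.0, 300.0, 800.0, 2000.0]
shown = show_on_display(scene, black_level=0.1, peak=100.0)
print(shown)  # [0.1, 0.1, 0.2, 1.0, 50.0, 100.0, 100.0, 100.0]
print(levels_lost(scene, shown), "levels of detail lost")  # 3 levels of detail lost
```

Both shadow values below 0.1 nits merge into one, and the three highlights above 100 nits become indistinguishable white.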

HDR remedies this problem by calculating the amount of light in a given scene and using that information to preserve details within the image, even in scenes with large variations in brightness. This is done in an attempt to create more realistic-looking images.
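As an illustration of the idea of measuring a scene’s light and using it to preserve detail, here is a sketch of Reinhard’s global tone-mapping operator. This is one well-known technique swapped in for illustration; it is an assumption that it resembles what any particular HDR standard, display, or ViewSonic product actually does. It measures the scene’s log-average luminance, scales the image around it, and then compresses highlights smoothly instead of clipping them.

```python
import math


def reinhard_tone_map(scene_nits, display_peak, key=0.18, eps=1e-6):
    """Compress scene luminance into [0, display_peak) with Reinhard's global operator.

    1. Measure overall scene brightness as the log-average luminance.
    2. Scale every value so the log-average maps to `key` (a mid-grey).
    3. Apply L / (1 + L): shadows stay nearly linear, highlights are squeezed
       toward the display peak instead of being clipped.
    """
    log_avg = math.exp(sum(math.log(eps + v) for v in scene_nits) / len(scene_nits))
    mapped = []
    for v in scene_nits:
        scaled = key * v / log_avg
        mapped.append(display_peak * scaled / (1.0 + scaled))
    return mapped


scene = [0.01, 0.05, 0.2, 1.0, 50.0, 300.0, 800.0, 2000.0]
mapped = reinhard_tone_map(scene, display_peak=100.0)
# Unlike hard clipping, every distinct input stays distinct and keeps its order.
print(len(set(mapped)) == len(set(scene)), mapped == sorted(mapped))  # True True
```

The trade-off is that bright values are compressed rather than reproduced at full intensity, which keeps detail visible at the cost of some absolute brightness.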

HDR vs. SDR Compared

SDR, or Standard Dynamic Range, is the current standard for video and cinema displays. Unfortunately, it can represent only a fraction of the dynamic range that HDR is capable of. HDR therefore preserves detail in scenes where the monitor’s contrast ratio would otherwise be a hindrance, while SDR cannot.

To put it simply, when comparing HDR vs. SDR, HDR allows you to see more of the detail and color in scenes with a high dynamic range. Another way to compare the two is by how much dynamic range each can represent.

For those of you familiar with photography, dynamic range can be measured in stops, much like the aperture settings of a camera, where each additional stop represents a doubling of light. The number of stops a format can represent depends on its gamma (transfer function) and bit depth, so it differs between HDR and SDR.

On a typical SDR display, for instance, images have a dynamic range of about 6 stops. HDR content, by contrast, can almost triple that, to an average of roughly 17.6 stops. Because of SDR’s narrower range, dark grey tones in a dark scene may be clipped to black, while colors and detail in the bright parts of a scene may be clipped to white.
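Because each stop doubles the light, “almost triple the stops” means far more than triple the contrast. This small Python sketch converts the 6-stop and 17.6-stop figures quoted in this article into contrast ratios.

```python
def stops_to_contrast(stops):
    """Each stop doubles the light, so n stops correspond to a 2**n : 1 contrast ratio."""
    return 2.0 ** stops


sdr_stops, hdr_stops = 6.0, 17.6  # figures quoted in this article
print(f"SDR: {stops_to_contrast(sdr_stops):,.0f}:1")  # SDR: 64:1
print(f"HDR: {stops_to_contrast(hdr_stops):,.0f}:1")
print(f"Stops ratio: {hdr_stops / sdr_stops:.2f}x")  # Stops ratio: 2.93x
print(f"Contrast ratio: {stops_to_contrast(hdr_stops) / stops_to_contrast(sdr_stops):,.0f}x")
```

The HDR figure works out to roughly 200,000:1, about 3,100 times the SDR contrast ratio, even though the stop count is only about 2.9 times larger.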

Which Factors Affect HDR?

When it comes to HDR, there are two prominent standards in use today: Dolby Vision and HDR10. We’ll go into the differences between them below.

Dolby Vision

Dolby Vision is an HDR standard that requires monitors to be specifically designed with a Dolby Vision hardware chip, for which Dolby receives licensing fees. Dolby Vision supports 12-bit color and a brightness limit of 10,000 nits. It’s worth noting that Dolby Vision’s color gamut and brightness ceiling exceed what today’s displays can achieve. Moreover, the barrier to entry for display manufacturers remains high because of its specific hardware requirements.
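The 10,000-nit figure comes from the Perceptual Quantizer (PQ) transfer function standardized as SMPTE ST 2084, which both Dolby Vision and HDR10 use to encode brightness. The Python sketch below implements the PQ encode and decode formulas with the constants published in that standard; the function names are our own, and the code is for illustration rather than production color management.

```python
# SMPTE ST 2084 (PQ) constants.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PEAK_NITS = 10000.0


def pq_encode(nits):
    """Absolute luminance (0-10,000 nits) -> normalized PQ signal value (0-1)."""
    y = max(0.0, min(nits, PEAK_NITS)) / PEAK_NITS
    ym = y ** M1
    return ((C1 + C2 * ym) / (1 + C3 * ym)) ** M2


def pq_decode(signal):
    """Normalized PQ signal value (0-1) -> absolute luminance in nits (the PQ EOTF)."""
    e = max(0.0, min(signal, 1.0)) ** (1 / M2)
    return PEAK_NITS * (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1 / M1)


for nits in (100, 1000, 10000):
    print(f"{nits:>6} nits -> PQ signal {pq_encode(nits):.3f}")
# 100 -> 0.508, 1000 -> 0.752, 10000 -> 1.000
```

Roughly half of the signal range is spent on brightness levels below 100 nits, because PQ allocates code values according to how sensitive human vision is to changes at each brightness level.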

HDR10

HDR10 is a more easily adoptable, open standard that lets manufacturers avoid having to submit to Dolby’s standards and fees. HDR10 uses 10-bit color and supports content mastered at 1,000 nits of brightness.

HDR10 has established itself as the default standard for 4K UHD Blu-ray discs and has also been used by Sony and Microsoft in the PlayStation 4 and the Xbox One S. When it comes to computer screens, some monitors from the ViewSonic VP Professional Monitor Series come equipped with HDR10 support.
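The difference between 10-bit and 12-bit color is easiest to see as arithmetic. This Python sketch counts the brightness levels per color channel and the total number of RGB combinations for 8-bit (the depth commonly used for SDR), 10-bit (HDR10), and 12-bit (Dolby Vision) content.

```python
def levels_per_channel(bits):
    """Number of distinct values one color channel can take."""
    return 2 ** bits


def total_colors(bits_per_channel):
    """Three channels (red, green, blue), each with 2**bits levels."""
    return levels_per_channel(bits_per_channel) ** 3


for label, bits in (("8-bit (typical SDR)", 8), ("10-bit (HDR10)", 10), ("12-bit (Dolby Vision)", 12)):
    print(f"{label:<22} {levels_per_channel(bits):>5} levels/channel, {total_colors(bits):>14,} colors")
# 8-bit:    256 levels,     16,777,216 colors
# 10-bit:  1024 levels,  1,073,741,824 colors
# 12-bit:  4096 levels, 68,719,476,736 colors
```

Extra levels matter more for HDR than for SDR. Spreading a much wider brightness range over only 256 steps per channel would leave visible gaps between adjacent shades, which shows up as banding in gradients such as skies.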

HDR Content

It’s an easy mistake to make, especially if you own an HDR television, to assume that all content is HDR content. This is not the case: not all content is created equal!

To take a related example, if you own a 4K television, you won’t benefit from the 4K detail unless the content you’re watching is also in 4K. The same goes for HDR: to enjoy it, you need to make sure the material you’re watching supports it.
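If you have a video file and want to check whether it actually carries HDR, one approach is to inspect its metadata with FFmpeg’s `ffprobe` tool, for example `ffprobe -v quiet -print_format json -show_streams input.mkv` (the file name is a placeholder). The Python sketch below parses that JSON and looks at the `color_transfer` field. It treats `smpte2084` (PQ, used by HDR10 and Dolby Vision) and `arib-std-b67` (HLG, another HDR transfer function) as HDR; this is a heuristic assumption, and files with missing or unusual metadata may be misreported.

```python
import json

# Transfer characteristics as named in ffprobe's "color_transfer" field.
HDR_TRANSFERS = {
    "smpte2084": "PQ (used by HDR10 and Dolby Vision)",
    "arib-std-b67": "HLG",
}


def describe_video_stream(ffprobe_json):
    """Return a short HDR/SDR verdict for the first video stream in ffprobe's JSON output."""
    data = json.loads(ffprobe_json)
    for stream in data.get("streams", []):
        if stream.get("codec_type") != "video":
            continue
        transfer = stream.get("color_transfer", "unknown")
        if transfer in HDR_TRANSFERS:
            return f"HDR: {HDR_TRANSFERS[transfer]}"
        return f"likely SDR (color_transfer={transfer})"
    return "no video stream found"


# Hypothetical, trimmed-down ffprobe output for an HDR10 file.
sample = '{"streams": [{"codec_type": "video", "color_transfer": "smpte2084", "color_primaries": "bt2020"}]}'
print(describe_video_stream(sample))  # HDR: PQ (used by HDR10 and Dolby Vision)
```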

So where do you find HDR content?

Currently, HDR content is available in a number of ways. For streaming, Netflix supports HDR on Windows 10, and Amazon Prime has jumped on the HDR bandwagon as well. For physical media, there are HDR Blu-ray discs and players available for purchase, along with the built-in players on the Sony PlayStation 4 and the Microsoft Xbox One S gaming consoles.

Is your setup capable of displaying HDR?

Once you’ve got your HDR content cued up, whether it’s an HDR video or an HDR game, you’ll have to make sure your setup is capable of displaying that HDR content.

The first step is making sure that your graphics card supports HDR.

HDR can be displayed over HDMI 2.0 and DisplayPort 1.3 connections or newer. If your GPU has either of these ports, it should be capable of displaying HDR content. As a rule of thumb, all Nvidia 9xx-series GPUs and newer have an HDMI 2.0 port, as do all AMD cards from 2016 onward.
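Port versions matter because HDR’s extra bit depth raises the data rate. The Python sketch below estimates the uncompressed RGB data rate of a 4K, 60 Hz signal and compares it with the usable bandwidth of each link. It counts visible pixels only and ignores blanking intervals, so real requirements are somewhat higher. The link figures are the standard signaling rates (18 Gbit/s for HDMI 2.0, 32.4 Gbit/s for DisplayPort 1.3) minus 8b/10b encoding overhead.

```python
# Usable data rates in Gbit/s: signaling rate x 0.8, since 8b/10b encoding sends 10 bits per 8 data bits.
LINK_GBPS = {"HDMI 2.0": 18.0 * 0.8, "DisplayPort 1.3": 32.4 * 0.8}


def active_pixel_gbps(width, height, fps, bits_per_channel, channels=3):
    """Uncompressed RGB data rate for the visible pixels only (blanking ignored)."""
    return width * height * fps * bits_per_channel * channels / 1e9


for bits in (8, 10):
    need = active_pixel_gbps(3840, 2160, 60, bits)
    fits = [name for name, cap in LINK_GBPS.items() if need <= cap]
    print(f"4K60 {bits}-bit RGB needs ~{need:.1f} Gbit/s; fits: {fits}")
# 8-bit:  ~11.9 Gbit/s, fits both links
# 10-bit: ~14.9 Gbit/s, exceeds HDMI 2.0's ~14.4 Gbit/s
```

Because 10-bit 4K60 RGB already exceeds HDMI 2.0 before blanking is counted, HDR at 4K60 over HDMI 2.0 is commonly sent with chroma subsampling (4:2:2 or 4:2:0), which reduces the color data per pixel.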

As far as your display goes, you’ll have to make sure that it too is capable of supporting HDR content. HDR-compatible displays must have a minimum of Full HD 1080p resolution. Products like the ViewSonic VP3268-4K and VP2785-4K are examples of 4K monitors with HDR10 content support. These monitors also factor color accuracy into the equation in an attempt to make sure that on-screen images look as true to life as possible.
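The requirements covered so far can be collected into a simple checklist. The Python sketch below encodes only the criteria named in this article (HDR content, an HDMI 2.0 or DisplayPort 1.3 or newer port, at least 1080p, and a display that lists an HDR format). The `Setup` class and function names are hypothetical, and port versions must be written as plain numbers such as "2.0"; suffixed versions like "2.0a" are not parsed.

```python
from dataclasses import dataclass, field

# Minimum port versions named in this article.
MIN_PORTS = {"HDMI": (2, 0), "DisplayPort": (1, 3)}


@dataclass
class Setup:
    """Hypothetical description of a setup; fill in from your own hardware specs."""
    content_is_hdr: bool
    gpu_outputs: list = field(default_factory=list)          # e.g. ["HDMI 2.0"]
    display_height_px: int = 1080                            # 1080 for Full HD, 2160 for 4K
    display_hdr_formats: list = field(default_factory=list)  # e.g. ["HDR10"]


def port_ok(port):
    """True if a port such as "HDMI 2.0" or "DisplayPort 1.4" meets the minimum version."""
    name, _, version = port.rpartition(" ")
    if name not in MIN_PORTS:
        return False
    return tuple(int(part) for part in version.split(".")) >= MIN_PORTS[name]


def hdr_checklist(setup):
    """Return a list of failed checks; an empty list means every check passed."""
    problems = []
    if not setup.content_is_hdr:
        problems.append("content is not HDR")
    if not any(port_ok(p) for p in setup.gpu_outputs):
        problems.append("GPU lacks HDMI 2.0 / DisplayPort 1.3 or newer")
    if setup.display_height_px < 1080:
        problems.append("display is below Full HD 1080p")
    if not setup.display_hdr_formats:
        problems.append("display lists no HDR format support")
    return problems


setup = Setup(content_is_hdr=True, gpu_outputs=["HDMI 2.0"],
              display_height_px=2160, display_hdr_formats=["HDR10"])
print(hdr_checklist(setup) or "ready for HDR")  # ready for HDR
```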

Is HDR a good investment for the future?

Throughout the history of any given medium, the standard for technology is always changing.

In music, vinyl records gave way to CDs, which in turn gave way to MP3s. In home video, VHS gave way to DVD and then Blu-ray. In television resolution, 480i gave way to 720p and 1080i over time. The pattern here is clear.

In the case of the last example, high-definition televisions did not become available in the United States until 1998, and did not become popular until five to eight years after that. Today, Full HD resolution is commonplace throughout the country, with 4K UHD acting as the new fringe option.

The current battle between HDR and SDR reflects the same trend. Although HDR has been commonplace in the photography world for some time, it is the new kid on the block in terms of televisions and monitors. Some have even gone so far as to argue that 1080p HDR looks better than 4K SDR!

If you’re considering a leap to HDR, you may be wondering: Is HDR a good investment? Will High Dynamic Range technology actually take off?

While nothing is ever 100% certain, the odds are in HDR’s favor. Currently, HDR is closely tied to ultra-high-definition resolution, otherwise known as 4K.

Since 4K is being adopted by the general market with remarkable ease and speed, it stands to reason that HDR will follow the same course going forward. We can compare HDR vs. SDR all day, but whether or not HDR is good for you will ultimately come down to your own personal experience. For now, feel free to explore ViewSonic’s range of HDR-compatible ColorPro monitors or dive deeper into the world of color correction and color grading.

Luckily for all of the early adopters out there, HDR products are not hard to come by. The benefits of HDR even extend into gaming by allowing you to see more detail in your games for a more realistic feel.

For more on gaming monitors with HDR, you can check out the ViewSonic XG3220 and XG3240C, which are equipped with gaming-centric features and HDR compatibility.